Micro Front Ends — Doing it Angular Style Part 2

In the previous part, I talked about the motivations for moving towards an MFE solution and some of the criteria for a solution to be relevant. In this part, I'll get into how we implemented it at Outbrain.

As I mentioned in the previous part, one of our criteria was a solution that integrates with our current technology ecosystem and requires little or no change to the applications we currently maintain.

Enter Angular Lazy Loading Feature Modules

Angular has a built-in concept of modules: classes decorated with @NgModule metadata that declare the components, directives, and pipes belonging to the module, the services it provides, and the other modules it imports.

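```typescript
import { NgModule } from '@angular/core';
import { CommonModule } from '@angular/common';
import { WelcomeComponent } from './welcome.component'; // path assumed for illustration

@NgModule({
  imports: [CommonModule],
  declarations: [WelcomeComponent],
  bootstrap: [],
  entryComponents: []
})
export class AppB_Module {}
```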

Specifying the module file as a Webpack entry point let us bundle the entire Angular module, including its CSS and HTML, into a single standalone JS file.

```javascript
// webpack.config.js (excerpt)
entry: {
  'appB_module': './app/appB.prod.module.ts'
}
```

Using Angular's lazy-loading mechanism, we can dynamically load this JS file and bootstrap it into our current application.

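```typescript
import { Routes } from '@angular/router';

const routes: Routes = [
  {
    path: 'appB',
    // String form: '<module file path>#<exported module class>'
    // (deprecated since Angular 8 in favor of dynamic import()).
    loadChildren: '/appB/appB_Module#AppB_Module'
  }
];
```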

This is a big step towards our goal of separating our application into mini applications.
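In our setup the Angular router does this work, but the behavior we rely on is simple enough to model without Angular: a route's bundle is fetched the first time someone navigates to it and reused afterwards. The framework-free TypeScript sketch below illustrates that idea; LazyRouteTable and the loader functions are hypothetical names, not part of the Angular API.

```typescript
// Hypothetical, framework-free model of lazy route loading:
// each route maps to a loader that runs at most once per successful load.
type Loader<T> = () => Promise<T>;

class LazyRouteTable<T> {
  private readonly loaders = new Map<string, Loader<T>>();
  private readonly cache = new Map<string, Promise<T>>();

  register(path: string, loader: Loader<T>): void {
    this.loaders.set(path, loader);
  }

  // Returns the module for `path`, invoking its loader only on first use.
  resolve(path: string): Promise<T> {
    const cached = this.cache.get(path);
    if (cached) return cached;

    const loader = this.loaders.get(path);
    if (!loader) {
      return Promise.reject(new Error(`No route registered for "${path}"`));
    }

    const pending = loader();
    this.cache.set(path, pending);
    // Forget failed loads so a later navigation can retry.
    pending.catch(() => this.cache.delete(path));
    return pending;
  }
}

// Usage: the loader stands in for downloading and evaluating appB's bundle.
let loadCount = 0;
const table = new LazyRouteTable<{ name: string }>();
table.register("appB", async () => {
  loadCount++;
  return { name: "AppB_Module" };
});
```

Caching the promise rather than the resolved module means two navigations that start at the same time still trigger only one download.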

Moving from feature modules to mini apps

Angular feature modules combined with Webpack bundling give us the code separation we need, but this is not enough, since Webpack only creates bundles as part of a single build process. What we want is to produce a separate JS bundle, built at a different time from a separate code base in a separate build system, that can be loaded into the application at runtime and share common resources such as Angular.

To solve this, we created our own Webpack loader, called share-loader.

Share-loader lets us specify a list of modules we want to share between applications. It bundles each such module into one application's JS bundle and provides a namespace through which other bundles access that module.

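Application A webpack.config.js:

```javascript
module.exports = {
  // ...other configuration
  module: {
    rules: [
      {
        test: /\.js$/,
        use: [{
          loader: 'share-loader',
          options: {
            // modules to bundle here and expose to other applications
            modules: [/@angular/, /lodash/],
            namespace: 'container-app'
          }
        }]
      }
    ]
  }
};
```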

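Application B webpack.config.js:

```javascript
const { Externals } = require('share-loader');

module.exports = {
  // ...other configuration
  externals: [
    // resolve these modules from the 'container-app' namespace instead of bundling them
    Externals({
      namespace: 'container-app',
      modules: [/@angular/, /lodash/]
    })
  ],
  output: {
    library: 'appB',
    libraryTarget: 'umd'
  }
};
```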

In this example, we tell Webpack to bundle Angular and lodash into application A and expose them under the ‘container-app’ namespace.

In application B, we declare that Angular and lodash should not be bundled and should instead be resolved through the ‘container-app’ namespace.
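Conceptually, the externals side boils down to one decision per import: is this request shared, and if so, where does it live at runtime? The sketch below models that decision as a pure function. The option names mirror the share-loader configuration above, but resolveSharedExternal and the returned global path are assumptions for illustration; this is not a description of how share-loader's Externals helper is actually implemented.

```typescript
// Hypothetical sketch: decide whether an import request is shared and,
// if so, which global property path it should be read from at runtime.
interface SharedExternalsOptions {
  namespace: string; // e.g. "container-app"
  modules: RegExp[]; // e.g. [/@angular/, /lodash/]
}

// Returns a path such as ["container-app", "@angular/core"],
// or undefined when the request should be bundled normally.
function resolveSharedExternal(
  request: string,
  options: SharedExternalsOptions
): string[] | undefined {
  const shared = options.modules.some((pattern) => pattern.test(request));
  return shared ? [options.namespace, request] : undefined;
}

const appBOptions: SharedExternalsOptions = {
  namespace: "container-app",
  modules: [/@angular/, /lodash/],
};

const angularCore = resolveSharedExternal("@angular/core", appBOptions);
// angularCore is ["container-app", "@angular/core"]
const localFile = resolveSharedExternal("./welcome.component", appBOptions);
// localFile is undefined, so this file is bundled into appB as usual
```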

This way, we can share some modules across applications while keeping the others private to each application.
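The runtime half of this arrangement can be sketched the same way: the container publishes the module instances it bundled under the namespace, and a mini app looks them up instead of shipping its own copies. exposeShared and requireShared are hypothetical helpers, and storing the registry on globalThis is an assumption made to keep the sketch self-contained.

```typescript
// Hypothetical sketch of runtime module sharing through a global namespace.
type ModuleRegistry = Record<string, unknown>;

function globals(): Record<string, unknown> {
  return globalThis as unknown as Record<string, unknown>;
}

// Called by the container app: publish module instances it bundled.
function exposeShared(namespace: string, modules: ModuleRegistry): void {
  const existing = (globals()[namespace] as ModuleRegistry | undefined) ?? {};
  globals()[namespace] = { ...existing, ...modules };
}

// Called by a mini app: use the container's instance instead of its own copy.
function requireShared<T>(namespace: string, request: string): T {
  const registry = globals()[namespace] as ModuleRegistry | undefined;
  if (!registry || !(request in registry)) {
    throw new Error(`"${request}" is not shared under "${namespace}"`);
  }
  return registry[request] as T;
}

// Usage: a stub object plays the role of the real @angular/core.
const angularCoreStub = { name: "@angular/core" };
exposeShared("container-app", { "@angular/core": angularCoreStub });
const coreInMiniApp = requireShared<typeof angularCoreStub>(
  "container-app",
  "@angular/core"
);
// coreInMiniApp === angularCoreStub: both apps use a single instance
```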

So far, we have tackled several of the key criteria we specified in the previous post. We now have two applications that can run independently or be loaded remotely at runtime, wrapped in a JS namespace, with CSS and HTML encapsulation. They can also share modules between them while encapsulating modules that shouldn't be shared. Now let's look at some of the other criteria we mentioned.

DOM encapsulation

To tackle CSS encapsulation, we wrapped each mini app in a generic Angular component that uses Angular's view encapsulation feature. We have two options, emulated mode or native (Shadow DOM) mode, and we choose between them based on the browser support we require. Either way, the mini app's CSS will not leak out.

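```typescript
import { Component, ViewEncapsulation } from '@angular/core';

@Component({
  selector: 'ob-externals-wrapper',
  template: require('./externals-wrapper.component.pug')(),
  styleUrls: ['./externals-wrapper.component.less'],
  // Native relies on the browser's Shadow DOM; ViewEncapsulation.Emulated is the
  // alternative. Later Angular versions replaced Native with ViewEncapsulation.ShadowDom.
  encapsulation: ViewEncapsulation.Native
})
export class ExternalsWrapperComponent {} // class name assumed for illustration
```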
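For the emulated option, Angular rewrites component styles so they only match elements carrying a generated attribute (such as _ngcontent-c1) that it adds to the component's own elements. The sketch below is a deliberately simplified, hypothetical illustration of that idea, not Angular's implementation: it only appends the attribute to the last compound selector of each comma-separated part and ignores pseudo-elements, :host, and at-rules.

```typescript
// Hypothetical, simplified model of emulated view encapsulation: each
// selector only matches elements that carry the component's scope attribute.
interface CssRule {
  selector: string;
  declarations: string;
}

function scopeRules(rules: CssRule[], scopeAttr: string): string {
  return rules
    .map(({ selector, declarations }) => {
      const scoped = selector
        .split(",")
        // Append the attribute to the last compound selector of each part.
        .map((part) => `${part.trim()}[${scopeAttr}]`)
        .join(", ");
      return `${scoped} { ${declarations} }`;
    })
    .join("\n");
}

const wrapperCss = scopeRules(
  [{ selector: "h1, .title", declarations: "color: red;" }],
  "_ngcontent-c1"
);
// wrapperCss === "h1[_ngcontent-c1], .title[_ngcontent-c1] { color: red; }"
```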

This wrapper component also serves as the communication layer between each mini app and the other apps. All communication goes through an event bus instance hosted by each wrapper instance. Using an event system gives us a decoupled way to pass data in and out, and we can easily clear it when a mini app is removed from the main application.
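A minimal version of such a per-wrapper event bus might look like the sketch below. The EventBus class, its method names, and the event name are hypothetical; the point is that clear() drops every subscription in one call, which is what makes cleanup easy when a mini app is removed.

```typescript
// Hypothetical per-wrapper event bus: one instance per mini app.
type Handler<T = unknown> = (payload: T) => void;

class EventBus {
  private readonly handlers = new Map<string, Set<Handler>>();

  // Subscribe to an event; returns a function that removes this handler.
  on<T>(event: string, handler: Handler<T>): () => void {
    const set = this.handlers.get(event) ?? new Set<Handler>();
    this.handlers.set(event, set);
    set.add(handler as Handler);
    return () => {
      set.delete(handler as Handler);
    };
  }

  // Deliver a payload to every current subscriber of `event`.
  emit<T>(event: string, payload: T): void {
    const set = this.handlers.get(event);
    if (!set) return;
    // Copy first so handlers may unsubscribe while we iterate.
    Array.from(set).forEach((handler) => handler(payload));
  }

  // Drop every subscription, e.g. when the mini app is unloaded.
  clear(): void {
    this.handlers.clear();
  }
}

// Usage
const bus = new EventBus();
const received: string[] = [];
const off = bus.on<string>("user:selected", (id) => received.push(id));
bus.emit("user:selected", "42");
off();
bus.emit("user:selected", "43");
bus.on<string>("user:selected", (id) => received.push(id));
bus.clear();
bus.emit("user:selected", "44");
// received contains only "42"
```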

Looking at what we have so far, the solution is very much in line with the web component concept: each mini application is wrapped by a standalone component that encapsulates all of its JS, HTML, and CSS, and all communication goes through an event system.

Testing

Since each application can also run independently, we can run each one's test suite independently. This means each application owner knows when their changes have broken the application, and each team is concerned mostly with its own application.

Deployment and serving

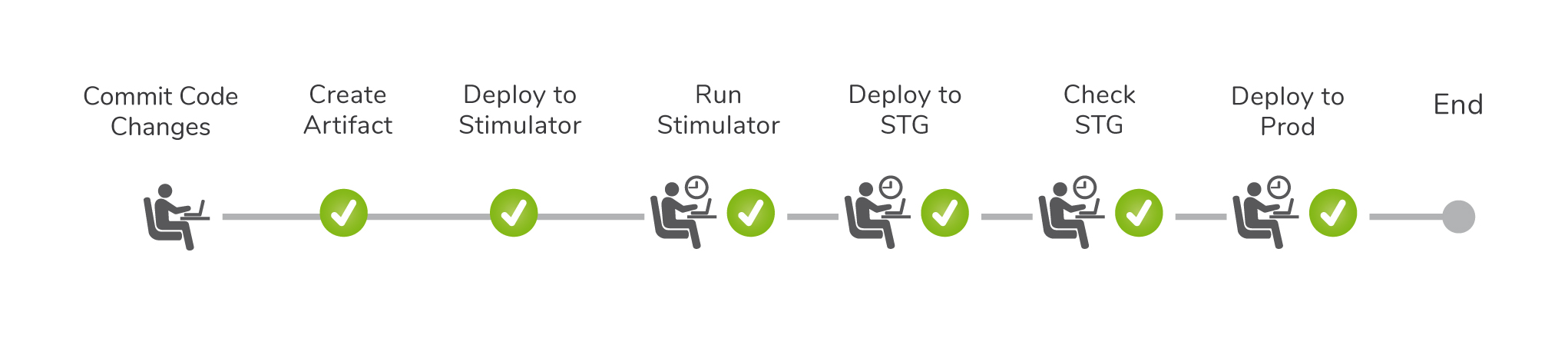

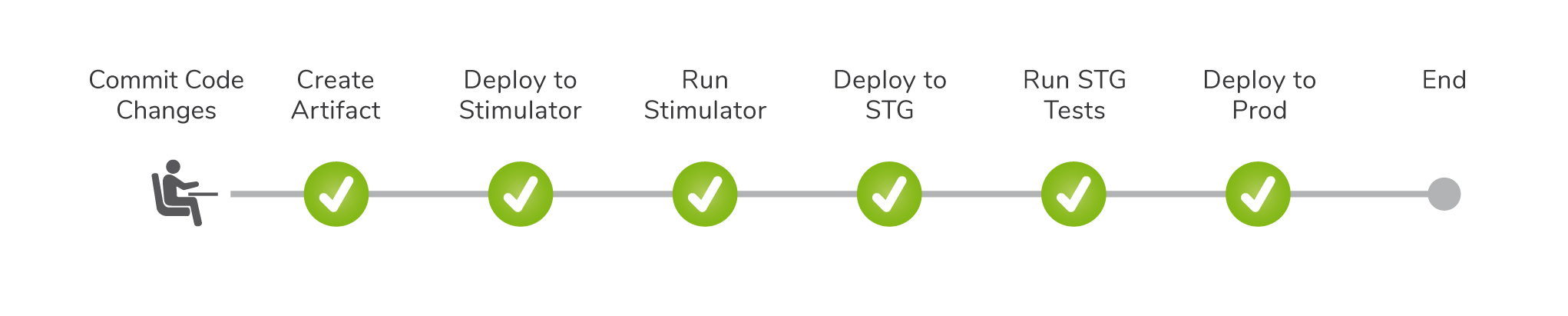

To give each application its own deployment, we created a Node service for each application. Each time a team deploys a new version of its application, a JS bundle that encapsulates the application is built, and the application's service exposes an endpoint that returns the path to that bundle. At runtime, when a mini app is loaded into the container app, the container calls this endpoint, loads the JS file, and bootstraps it into the main application. This way, each application can be built and deployed separately.
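The runtime lookup can be sketched as follows. The /bundle endpoint, the { path } response shape, and the example.com URLs are assumptions for illustration; fetching and script loading are passed in as functions so the sketch stays self-contained. In a browser, fetchJson could wrap fetch() and loadScript could append a script element and resolve on its load event.

```typescript
// Hypothetical sketch: ask a mini app's deployment service for its current
// bundle path, then load that bundle into the container app.
interface BundleInfo {
  path: string;
}

type FetchJson = (url: string) => Promise<unknown>;
type LoadScript = (src: string) => Promise<void>;

async function loadMiniApp(
  appName: string,
  serviceUrl: string, // base URL of the app's Node service (assumed)
  fetchJson: FetchJson,
  loadScript: LoadScript
): Promise<string> {
  // "/bundle" is an assumed endpoint name that returns { path: string }.
  const info = (await fetchJson(`${serviceUrl}/bundle`)) as Partial<BundleInfo> | null;
  if (!info || typeof info.path !== "string") {
    throw new Error(`Service for ${appName} returned no bundle path`);
  }
  const path = info.path;
  await loadScript(path);
  return path;
}

// Usage with in-memory stand-ins for the network and the script loader.
const loaded: string[] = [];
const fakeFetch: FetchJson = async (url) =>
  url === "https://apps.example.com/appB/bundle"
    ? { path: "https://cdn.example.com/appB/appB_module.1a2b3c.js" }
    : {};
const fakeLoad: LoadScript = async (src) => {
  loaded.push(src);
};
```

Because the container asks for the path at runtime, a team can ship a new bundle without rebuilding or redeploying the container app.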

Closing Notes:

Thanks for reading! I hope this article helps companies that are considering this move to realize that it is possible to do it without revolutionizing your code base.

Moving to a Micro Front End approach is a move in the right direction: as applications get bigger, velocity gets smaller.

This article shows a solution using Angular as the framework; similar solutions can be achieved with other frameworks.