Leader Election with Zookeeper

Recently we had to implement active-passive redundancy for a singleton service in our production environment, where the general rule is to always have “more than one of anything”. The main motivation is to alleviate the need to manually monitor and manage these services, whose presence is crucial to the overall health of the site.

This means that we sometimes have a service installed on several machines for redundancy, but only one of them is active at any given moment. If the active service goes down for some reason, another instance rises to do its work. This is called leader election. One of the most prominent open source implementations facilitating the process of leader election is Zookeeper. So what is Zookeeper?

Originally developed by Yahoo! Research, Zookeeper acts as a service providing reliable distributed coordination. It is highly concurrent, very fast and suitable mainly for read-heavy access patterns. Reads can be done against any node of a Zookeeper cluster, while writes are quorum-based. To reach a quorum, Zookeeper utilizes an atomic broadcast protocol. So how does it work?

Connectivity, State and Sessions

Zookeeper maintains an active connection with all its clients using a heartbeat mechanism. Furthermore, Zookeeper keeps a session for each active client that is connected to it. When a client is disconnected from Zookeeper for longer than a specified timeout, the session expires. This means that Zookeeper has a pretty good picture of all the animals in its zoo.

Data Model

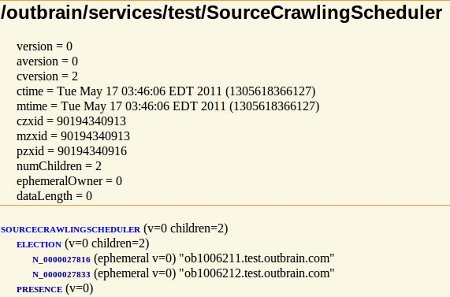

The Zookeeper data model consists of a hierarchy of nodes, called ZNodes. ZNodes can hold a relatively small amount of data (efficiency is key here), and they are versioned and timestamped. A ZNode can have several properties that make it particularly useful for different use cases: each node is either persistent or ephemeral, and can additionally be sequential. These flags determine the naming of the node and its behavior with respect to the client session.

- The persistent node is basically a managed data bin

- The ephemeral node exists for the lifetime of its client session

- The sequential node, when created, gets a unique number (sequence) suffixed to its name

The latter two provide the means to implement a variety of distributed coordination tasks such as locks, queues, barriers, transactions, elections and other synchronization-related tasks.
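To make the sequential flag concrete, here is a minimal sketch of the naming it produces. Zookeeper appends a zero-padded, 10-digit counter to the requested path. The class SequentialNames, its methods, and the "/election/n_" prefix are hypothetical names used for illustration only; they are not part of Zookeeper or ZkClient.

```java
import java.util.Locale;

// Hypothetical helper for illustration; not part of Zookeeper or ZkClient.
final class SequentialNames {
    // Mimics the name Zookeeper assigns to a sequential node:
    // the requested prefix followed by a zero-padded, 10-digit counter.
    static String withSequence(String prefix, int counter) {
        return String.format(Locale.ROOT, "%s%010d", prefix, counter);
    }

    // Recovers the counter from the trailing 10 digits of a node name.
    static int sequenceOf(String nodeName) {
        return Integer.parseInt(nodeName.substring(nodeName.length() - 10));
    }
}
```

Because the suffix has a fixed width, sorting node names that share a prefix as plain strings also sorts them by sequence number, which is what the election recipe relies on.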

Here’s what an election path looks like in the Solr Cloud Admin Console:

Events

Zookeeper allows its clients to watch for different events in its node hierarchy. This way clients can get notified of changes in the distributed state of affairs and act accordingly. These watches are one-time triggers and must be registered again by the client after each notification. The client is also responsible for handling session expiration, which means that ephemeral nodes have to be re-created after an expiration.
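The one-time nature of watches can be illustrated with a small in-memory model. This is only a sketch of the behavior, not the Zookeeper API: the class OneShotWatches and its methods are hypothetical. The watchPersistently method shows the re-registration a client (or a listener abstraction on top of it) has to do to keep receiving notifications.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// In-memory model of one-shot watches; illustrative only, not the Zookeeper API.
final class OneShotWatches {
    private final Map<String, List<Consumer<String>>> watches = new HashMap<>();

    // Registers a watch that fires at most once for the given path.
    void watch(String path, Consumer<String> callback) {
        watches.computeIfAbsent(path, p -> new ArrayList<>()).add(callback);
    }

    // Registers a watch that re-arms itself before delivering each notification.
    void watchPersistently(String path, Consumer<String> callback) {
        watch(path, p -> {
            watchPersistently(p, callback);
            callback.accept(p);
        });
    }

    // Removes and fires every watch on the path; returns how many fired.
    int fire(String path) {
        List<Consumer<String>> fired = watches.remove(path);
        if (fired == null) {
            return 0;
        }
        for (Consumer<String> callback : fired) {
            callback.accept(path);
        }
        return fired.size();
    }
}
```

A plain watch is gone after the first event, so a second change on the same path goes unnoticed unless the client registers again.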

Client Implementation

Zookeeper requires a lot of boilerplate code, mostly around connectivity, and most of the time you will be doing the same things over and over. Luckily, Stefan Groschupf and Patrick Hunt wrote a client abstraction called ZkClient. I published a Maven artifact for this on OSS so it’s available to our build system. The library also provides a persistent event notification mechanism in the form of listeners.

The next thing to do was to cook up a Spring factory bean for ZkClient and a template-style class to act as an abstraction layer over Zookeeper operations. This ties nicely into the Spring container, which we use extensively:

[gist id=1073533 file=ZkClient.xml]

The ZooKeeperClientStatsCollector is a listener implementation which collects stats about session connects/disconnects, exported to JMX as an MBean.

Now that we have a working data access layer we can start with the good stuff.

Leader Election

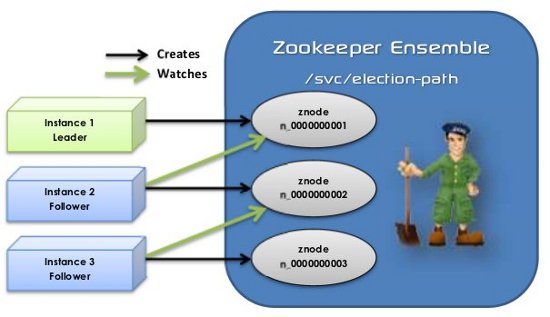

The Zookeeper documentation describes in general terms how leader election is to be performed. The general idea is that all participants of the election process create an ephemeral-sequential node on the same election path. The node with the smallest sequence number is the leader. Each “follower” node listens to the node with the next lower sequence number to prevent a herding effect when the leader goes away. In effect, this creates a linked list of nodes. When a node’s local leader dies, it goes to election and either finds a smaller node to follow or becomes the leader if it has the lowest sequence number.

The following image describes a scenario with 3 clients participating in the election process:

Each client participating in this process has to:

- Create an ephemeral-sequential node to participate under the election path

- Find its leader and follow (watch) it

- Upon leader removal go to election and find a new leader, or become the leader if no leader is to be found

- Upon session expiration check the election state and go to election if needed
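The decision each client makes when it goes to election can be sketched as a pure function over the children of the election path. This is a minimal sketch, assuming all children share the same name prefix so that sorting them as strings matches their sequence order. The class ElectionStep and the method nodeToWatch are hypothetical names, not taken from the project on GitHub.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Optional;

// Hypothetical helper for illustration; not the implementation from this post.
final class ElectionStep {
    // Returns the node this client should watch, or empty if the client is the leader.
    // Assumes every child has the same prefix and a fixed-width sequence suffix.
    static Optional<String> nodeToWatch(List<String> children, String self) {
        List<String> sorted = new ArrayList<>(children);
        Collections.sort(sorted);
        int index = sorted.indexOf(self);
        if (index < 0) {
            // Our ephemeral node is gone, e.g. after a session expiration.
            throw new IllegalStateException("own node missing: " + self);
        }
        // The smallest node leads; everyone else follows the next lower node.
        return index == 0 ? Optional.empty() : Optional.of(sorted.get(index - 1));
    }
}
```

A client would call this after creating its node and again whenever the node it watches is removed. Watching only the immediate predecessor is what keeps a leader failure from waking up every participant at once.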

One thing to consider here is the nature of the work being done by the leader. Make sure its state can be preserved if its leadership is revoked. Leader loss could be caused by any number of reasons including initiated restarts due to maintenance and releases. It could also be brought about by network partitioning.

Designing services for graceful recovery is a requirement of distributed systems in general, not of leader election specifically.

Spring helps here because interception can be used to suppress method invocations of various services based on leadership status. Below is an example of an interception based leadership control:

[gist id=1073533 file=LeaderElectionInterceptor.xml]

The service myService is the one controlled by leader election. All its methods are going to be suppressed or invoked based on leadership status.
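The idea of suppressing invocations based on leadership can also be shown without Spring. The sketch below uses a plain JDK dynamic proxy as a stand-in for the Spring interceptor; it is not the implementation from the gist. The class LeaderGate and the method guard are hypothetical, and the sketch assumes the guarded interface only has void methods, since a suppressed call simply returns null.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical stand-in for a leadership interceptor; not the code from the gist.
final class LeaderGate {
    // Wraps target so that calls only reach it while isLeader is true.
    // Assumption: methods of iface return void; a suppressed call returns null.
    @SuppressWarnings("unchecked")
    static <T> T guard(Class<T> iface, T target, AtomicBoolean isLeader) {
        InvocationHandler handler = (proxy, method, args) -> {
            if (!isLeader.get()) {
                return null; // not the leader: swallow the invocation
            }
            try {
                return method.invoke(target, args);
            } catch (InvocationTargetException e) {
                throw e.getCause(); // rethrow the target's own exception
            }
        };
        return (T) Proxy.newProxyInstance(
                LeaderGate.class.getClassLoader(), new Class<?>[] {iface}, handler);
    }
}
```

In a real setup the flag would be flipped by the election listener when leadership is granted or revoked, and callers would only ever see the guarded proxy.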

Another implementation uses a quartz scheduler instance as its target:

[gist id=1073533 file=LeaderElectionQuartzScheduler.xml]

This implementation puts a Quartz scheduler in standby mode when leadership is revoked and resumes it when leadership is granted. Notice that it will not actually stop running tasks; these are allowed to run to their natural completion, so in effect you may have a scheduled task running on two services at once in partitioning scenarios. This means that whatever is scheduled has to be aware of another service possibly doing the same work. This problem can be easily solved with a Zookeeper barrier implementation, more on that in another post.

But there’s more than leader election you could do with Zookeeper

If you wish to run your data center the democratic way, where important decisions are made in coordination with other stakeholders, Zookeeper certainly helps.

Leader Election with Spring is on GitHub. The source shown in this post can be found on Gist.

Zookeeper documentation and wiki.

Happy Zookeeping!