Hadoop Research Journey from Bare Metal to Google Cloud – Episode 1

Outbrain is the world’s leading discovery platform, serving over 250 billion personal recommendations per month. To provide premium recommendations at such a scale, we rely on our ability to analyze large amounts of data. We use a variety of data stores and technologies, such as MySQL, Cassandra, Elasticsearch, and Vertica. In this trilogy of posts (all things can be split into 3…), however, I would like to focus on our Hadoop ecosystem and our journey from pure bare metal to a hybrid cloud solution.

The bare metal period

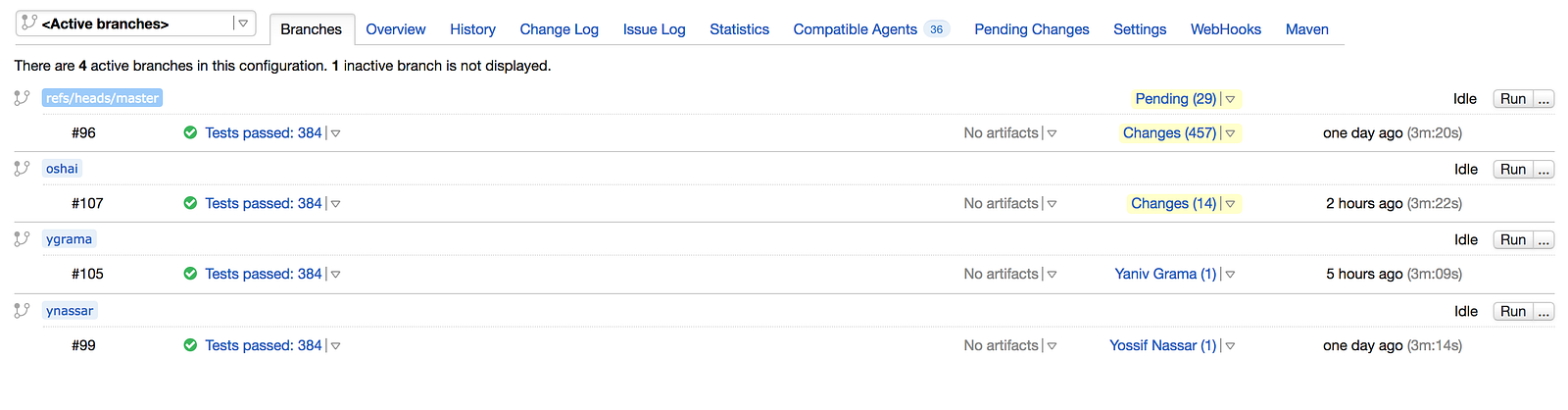

In a nutshell, we keep two flavors of Hadoop clusters:

- Online clusters, used for online serving activities. These clusters are relatively small (2 PB of data per cluster) and are kept on bare metal in our datacenters, as part of our serving infrastructure.

- Research cluster, used (surprisingly) mainly for research and offline activities. This cluster holds a large amount of data (6 PB), and by nature its workload is elastic. Most of the time it was underutilized, but there were peak periods when huge amounts of data needed to be queried.

History lesson

Before we move forward in our tale, it may be worthwhile to say a few words about the history.

We first started using Hadoop at Outbrain over 6 years ago, as a small technical experiment. As our business rapidly grew, so did the data, and the clusters were resized accordingly, but tech debt built up around them. We continued to grow the clusters using a scale-out methodology, and after some time found ourselves with clusters that ran an old Hadoop version, could not support new technologies, and were built from hundreds of servers, some of which were very old.

We decided we needed to stop being firefighters and get super proactive about the issue. We first took care of the Online clusters and migrated them to a new in-house bare metal solution (you can read more about this in the Migrating Elephants post on the Outbrain Tech Blog site).

Now it was time to move forward and deal with our Research cluster.

Research cluster starting point

Our starting point for the Research cluster was a cluster built out of 500 servers, holding about 6 PB of data and running the CDH4 community version.

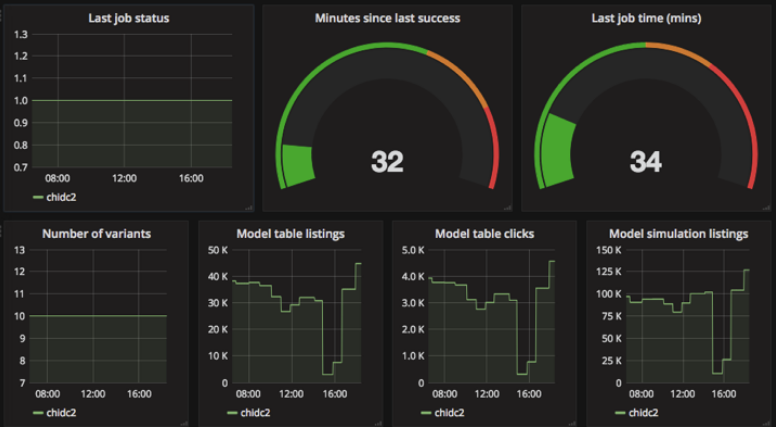

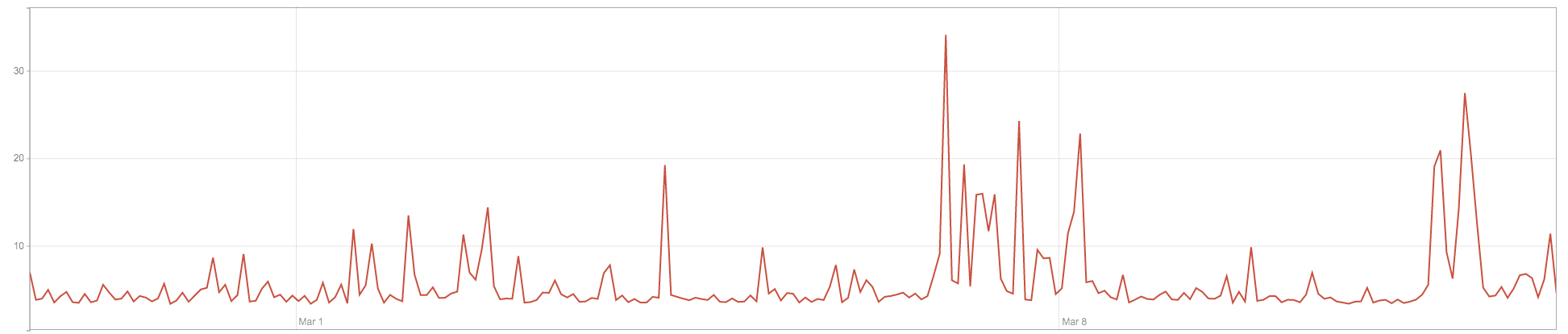

As mentioned before, the workload on this cluster is elastic: at times it requires a lot of compute power, but most of the time it is fairly underutilized (see the CPU utilization graph).

This graph shows CPU utilization over 2 weeks. As can be seen, usage is not constant: most of the time the cluster is barely used, with some periodic peaks.
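To make the "mostly idle with periodic peaks" pattern concrete, here is a minimal Python sketch of how such a utilization series could be summarized. The sample values, the `busy_threshold` parameter, and the function name are all hypothetical and are not taken from our actual monitoring data.

```python
from statistics import mean


def summarize_utilization(samples, busy_threshold=0.7):
    """Summarize CPU utilization samples given as fractions in [0.0, 1.0].

    busy_threshold is an illustrative cutoff for calling a sample "busy".
    """
    if not samples:
        raise ValueError("samples must not be empty")
    avg = mean(samples)
    peak = max(samples)
    busy = sum(1 for s in samples if s >= busy_threshold)
    return {
        "average": avg,
        "peak": peak,
        # How much larger the peak is than the typical load.
        "peak_to_average": peak / avg if avg > 0 else float("inf"),
        # Share of samples in which the cluster was actually busy.
        "busy_fraction": busy / len(samples),
    }


if __name__ == "__main__":
    # Hypothetical hourly samples for one day: mostly idle, one short burst.
    hourly = [0.05] * 20 + [0.90] * 4
    for name, value in summarize_utilization(hourly).items():
        print(f"{name}: {value:.2f}")
```

A high peak-to-average ratio combined with a low busy fraction is the signature of an elastic workload: capacity sized for the peak sits idle most of the time.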

The cluster was unable to support new technologies (such as Spark and ORC) that were already in use on the Online clusters, which reduced our ability to use it for real research.

On top of that, some of the servers in this cluster were becoming very old, and because we had grown the cluster on the fly, its storage:CPU:RAM ratio was suboptimal, causing us to waste expensive footprint in our datacenter.

On top of all of the above, it caused the team so much frustration!

We mapped our options moving forward:

- Do an in-place upgrade of the Research cluster software.

- Rebuild the Research cluster from scratch on bare metal in our datacenters (similar to the project we did with the Online clusters).

- Leverage cloud technologies and migrate the Research cluster to the cloud.

The dilemma

Option #1 was dropped immediately, since it answered only a fraction of our frustration at best. It did not address the old hardware issues, and it did not address our concerns regarding suboptimal storage:CPU:RAM ratios, which we understood would only get worse once we started using RAM-intensive technologies such as Spark.

We had a dilemma between option #2 and option #3, both viable options with pros and cons.

Building the Research cluster in house was a project we were very familiar with (we had just finished our Online clusters migration), and our users were very familiar with the technology, so there was no learning curve on this front. On the other hand, it required a big financial investment, and we would be unable to leverage elasticity to the extent we wanted.

Migrating to the cloud answered our elasticity needs, but it presented an unpredictable cost model (cost predictability being very important to the finance guys) and had many unknowns, as it was new both for us and for the users who would need to work with the environment. It was clear that learning and education would be needed, but it was not clear how steep the learning curve would be.

On top of that, we knew that we had to have full compatibility between the Research cluster and the Online clusters, but it was hard for us to estimate the effort required to get there, or the number of processes that required moving data between the clusters.
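The compute side of the trade-off between options #2 and #3 can be illustrated with a small back-of-the-envelope sketch. Everything here is an assumption for illustration only: the demand profile, the prices (in arbitrary units per node-hour), and the function names are hypothetical, and the model deliberately ignores storage, data transfer, migration effort, and the learning curve discussed above.

```python
def fixed_cost(peak_nodes, hours, cost_per_node_hour):
    """Cost of a fixed cluster sized for peak demand and paid for every hour."""
    return peak_nodes * hours * cost_per_node_hour


def elastic_cost(demand_nodes_per_hour, cost_per_node_hour):
    """Cost of paying only for the nodes actually needed in each hour."""
    return sum(demand_nodes_per_hour) * cost_per_node_hour


def break_even_price_ratio(demand_nodes_per_hour):
    """How many times pricier an elastic node-hour can be than a fixed one
    before the elastic option stops being cheaper for this demand profile."""
    total = sum(demand_nodes_per_hour)
    if total == 0:
        raise ValueError("demand must contain at least one busy hour")
    peak = max(demand_nodes_per_hour)
    return peak * len(demand_nodes_per_hour) / total


if __name__ == "__main__":
    # Hypothetical day: 20 quiet hours at 10 nodes, 4 peak hours at 100 nodes.
    demand = [10] * 20 + [100] * 4
    print("fixed:", fixed_cost(max(demand), len(demand), 1.0))
    print("elastic:", elastic_cost(demand, 2.0))
    print("break-even price ratio:", break_even_price_ratio(demand))
```

The burstier the workload, the higher the break-even ratio. The flip side is that the elastic bill follows actual demand, which is exactly what makes it harder to predict.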

So, what do we do when we don’t know which option is better?

We study and experiment! And this is how we entered the 2nd period – the POC.

You are invited to read about the POC we did, and how we did it, in the next episode: “Hadoop Research Journey from Bare Metal to Google Cloud – Episode 2”.