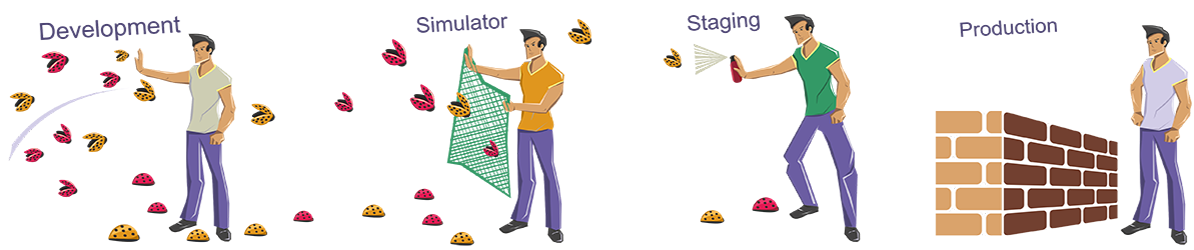

Keep bugs out of production

Production bugs are painful and can severely impact a dev team’s velocity. My team at Outbrain has implemented a work process that lets us ship new features to production free of bugs, a process that combines automation with team discipline.

Why should I even care?

Bugs happen all the time, and they will be found either before release or in production. The main difference between catching a bug in a pre-production environment and finding it in production is cost: according to IBM’s research, fixing a bug in production can cost five times more than fixing it in pre-production (during the design, local development, or test phase).

Let’s walk through one scenario that can unfold once a bug reaches production:

- A customer finds the bug and alerts customer service.

- The bug is logged by the production team.

- The developer gets the description of the bug, opens the spec, and spends time reading it over.

- The developer then spends time reproducing the bug.

- The developer must then get reacquainted with the code in order to debug it.

- Next, the fix must undergo tests.

- The fix is then built and deployed in other environments.

- Finally, the fix goes through QA testing (requiring QA resources).

How to stop bugs from reaching production

To catch and fix bugs at the most time- and cost-efficient stage, we follow these steps, adhering to several core principles:

Stage 1 – Local Environment and CI

Step 1: Design well. Keep it simple.

Create the design before coding: try to divide difficult problems into smaller parts/steps/modules that you can tackle one by one, thinking of objects with well-defined responsibilities. Share the plans with your teammates at design-review meetings. Good design is a key to reducing bugs and improving code quality.

Step 2: Start Coding

The code should be readable and simple. Design and development principles are your best friends: apply SOLID, DRY, YAGNI, KISS, and polymorphism as you implement your code.

Unit tests are part of the development process. We use them to test individual code units and ensure that the unit is logically correct.

Unit tests are written and executed by developers. Most of the time we use JUnit as our testing framework.
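As a rough illustration of what testing a single unit looks like, here is a minimal sketch. The `ClickThroughRate` class and its test are hypothetical and not taken from our codebase. To keep the sketch free of dependencies it uses plain Java checks; in a JUnit test each check method would carry the `@Test` annotation and use JUnit's assertion helpers instead of the local `check` method.

```java
// Hypothetical unit under test (illustrative only).
final class ClickThroughRate {
    private ClickThroughRate() {}

    /** Returns clicks / impressions, or 0.0 when there were no impressions. */
    static double compute(long clicks, long impressions) {
        if (clicks < 0 || impressions < 0) {
            throw new IllegalArgumentException("counts must be non-negative");
        }
        if (impressions == 0) {
            return 0.0;
        }
        return (double) clicks / impressions;
    }
}

// Dependency-free stand-in for a JUnit test class.
final class ClickThroughRateTest {
    static void computesRatio() {
        check(ClickThroughRate.compute(5, 20) == 0.25, "5 clicks / 20 impressions should be 0.25");
    }

    static void zeroImpressionsYieldZero() {
        check(ClickThroughRate.compute(0, 0) == 0.0, "no impressions should yield 0.0");
    }

    static void negativeCountsAreRejected() {
        try {
            ClickThroughRate.compute(-1, 10);
        } catch (IllegalArgumentException expected) {
            return; // the unit rejected the invalid input, as intended
        }
        throw new AssertionError("negative clicks should be rejected");
    }

    /** Runs every check; throws AssertionError on the first failure. */
    static void runAll() {
        computesRatio();
        zeroImpressionsYieldZero();
        negativeCountsAreRejected();
    }

    private static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }
}
```

Each check covers one behavior of the unit (the normal case, an edge case, and invalid input), so a failure points directly at the logic that broke.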

Step 3: Use code analysis tools

To help ensure and maintain the quality of our code, we use several automated code-analysis tools:

FindBugs – A static code analysis tool that detects possible bugs in Java programs, helping us to improve the correctness of our code.

Checkstyle – A development tool that helps programmers write Java code that adheres to a coding standard by automating the process of checking the code against that standard.
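To make the value of static analysis concrete, here is a small sketch of the kind of defect a tool such as FindBugs is designed to report: comparing strings by reference instead of by value. The `RoleCheck` class is hypothetical and exists only for illustration.

```java
// Hypothetical example of a bug pattern that static analysis can catch.
final class RoleCheck {
    private RoleCheck() {}

    /** Buggy: == compares object references, not the characters of the strings. */
    static boolean isAdminBuggy(String role) {
        return role == "admin";
    }

    /** Fixed: equals compares contents, and calling it on the literal is null-safe. */
    static boolean isAdmin(String role) {
        return "admin".equals(role);
    }
}
```

The buggy version compiles and may even pass a casual test that passes in a string literal, which is why this class of mistake is a good fit for an automated check rather than for human review alone.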

Step 4: Perform code reviews

We all know that code reviews are important. There are many best practices online (see 7 Ways to Up-Level Your Code Review Skills, Best Practices for Peer Code Review, and Effective Code Reviews), so let’s focus on the tools we use. All of our code commits are posted to ReviewBoard, where developers can review the committed code, see the latest changes at any time, and share feedback.

For the more critical teams, we have a build that makes sure every commit has passed a code review. If a review has not been done, the build alerts the team that there was an unreviewed change.
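The core of such a check can be sketched in a few lines. This is an assumption-laden illustration, not our actual build step: `ReviewGate` is a hypothetical name, and the sketch assumes the commit IDs and the set of reviewed commit IDs have already been fetched from the version control and review systems. It does not call any ReviewBoard or TeamCity API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical review gate: reports commits that have no approved review.
final class ReviewGate {
    private ReviewGate() {}

    /** Returns the commits, in their original order, that are missing from the reviewed set. */
    static List<String> findUnreviewed(List<String> commitIds, Set<String> reviewedCommitIds) {
        List<String> unreviewed = new ArrayList<>();
        for (String commitId : commitIds) {
            if (!reviewedCommitIds.contains(commitId)) {
                unreviewed.add(commitId);
            }
        }
        return unreviewed;
    }
}
```

A build step could alert the team whenever the returned list is not empty.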

Regardless of whether you are performing a post-commit, a pull request, or a pre-commit review, you should always aim to check and review what’s being inserted into your codebase.

Step 5: CI

This is where all code is integrated. We use TeamCity to enforce our code standards and correctness by running unit tests, FindBugs validations, Checkstyle rules, and other policies.

Stage 2 – Testing Environment

Step 1: Run integration tests

Check whether the system as a whole works. Integration testing is also done by developers, but rather than testing individual components, it aims to test across components. A system consists of many separate components, such as code, databases, and web servers.

Integration tests can spot issues such as miswired components, network access problems, and database issues. We use Jenkins and TeamCity to run CI tests.

Step 2: Run functional tests

Check that each feature is implemented correctly by comparing the results for a given input with the specification. Typically, this is not done at the development level.

Test cases are written based on the specification, and the actual results are compared with the expected results. We run functional tests using Selenium and Protractor for UI testing and JUnit for API testing.

Stage 3 – Staging Environment

This environment is often referred to as a pre-production sandbox, a system testing area, or simply a staging area. Its purpose is to provide an environment that simulates your actual production environment as closely as possible so you can test your application in conjunction with other applications.

We route a small percentage of real production requests to the staging environment, where QA tests the features.

Stage 4 – Production Environment

Step 1: Deploy gradually

Deployment is the process that delivers our code to production machines. If errors occur during deployment, our Continuous Delivery system pauses the deployment, preventing the problematic version from reaching all the machines and allowing us to roll back quickly.
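The idea of deploying in batches and pausing on the first sign of trouble can be sketched as follows. This is a simplified illustration under stated assumptions, not our Continuous Delivery system: `DeploymentTarget`, `GradualDeployer`, and the batch-size parameter are hypothetical names, and the health check is a stand-in for whatever signal a real system would use.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical abstraction over the machines being deployed to.
interface DeploymentTarget {
    void deploy(String host);        // install the new version on one host
    boolean isHealthy(String host);  // post-deploy health check for one host
}

final class GradualDeployer {
    private GradualDeployer() {}

    /** Outcome of a rollout: whether it finished, and which hosts got the new version. */
    static final class Result {
        final boolean completed;
        final List<String> updatedHosts;

        Result(boolean completed, List<String> updatedHosts) {
            this.completed = completed;
            this.updatedHosts = new ArrayList<>(updatedHosts);
        }
    }

    /** Deploys batch by batch and pauses as soon as a batch contains an unhealthy host. */
    static Result rollOut(List<String> hosts, int batchSize, DeploymentTarget target) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        List<String> updated = new ArrayList<>();
        for (int start = 0; start < hosts.size(); start += batchSize) {
            List<String> batch = hosts.subList(start, Math.min(start + batchSize, hosts.size()));
            for (String host : batch) {
                target.deploy(host);
                updated.add(host);
            }
            for (String host : batch) {
                if (!target.isHealthy(host)) {
                    // Pause here: the remaining hosts keep running the old version.
                    return new Result(false, updated);
                }
            }
        }
        return new Result(true, updated);
    }
}
```

When the rollout pauses, `updatedHosts` tells the caller exactly which machines need to be rolled back, which keeps the rollback small and fast.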

Step 2: Incorporate feature flags

All our new components are released with feature flags, which basically serve to control the full lifecycle of our features. Feature flags allow us to manage components and compartmentalize risk.
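A feature flag can be as simple as a named boolean that guards the new code path. The sketch below is a minimal in-memory illustration; `FeatureFlags`, `RecommendationService`, and the `new-ranking` flag name are hypothetical, and a real setup would typically load flag values from configuration or a flag service.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical in-memory flag store; unknown flags default to off.
final class FeatureFlags {
    private final Map<String, Boolean> flags = new ConcurrentHashMap<>();

    void set(String name, boolean enabled) {
        flags.put(name, enabled);
    }

    boolean isEnabled(String name) {
        return flags.getOrDefault(name, false);
    }
}

// Hypothetical component whose new behavior is guarded by a flag.
final class RecommendationService {
    private final FeatureFlags flags;

    RecommendationService(FeatureFlags flags) {
        this.flags = flags;
    }

    String rank(String userId) {
        if (flags.isEnabled("new-ranking")) {
            return "new-ranking:" + userId;    // new code path, only when the flag is on
        }
        return "legacy-ranking:" + userId;     // existing behavior stays the default
    }
}
```

Because the flag defaults to off, new code can be deployed dark and switched on later, and switching it off again is a configuration change rather than a redeploy.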

Step 3: Release gradually

There are two ways to make our release gradual:

- Test new features on a small set of users before releasing to everyone.

- Open the feature initially to, say, 10% of our customers, then 30%, then 50%, and then 100%.

Both methods allow us to monitor and track problematic scenarios in our systems.
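One common way to implement a percentage-based release is to map each user to a stable bucket from 0 to 99 and enable the feature for buckets below the current percentage. The sketch below assumes this bucketing approach; it is not necessarily how our system does it. `PercentageRollout` is a hypothetical name, and `String.hashCode` is used only because it is deterministic and needs no dependencies.

```java
// Hypothetical percentage rollout based on stable per-user buckets.
final class PercentageRollout {
    private PercentageRollout() {}

    /** Maps a (feature, user) pair to a stable bucket in the range 0..99. */
    static int bucketFor(String featureName, String userId) {
        // floorMod keeps the result non-negative even for negative hash codes.
        return Math.floorMod((featureName + ":" + userId).hashCode(), 100);
    }

    /** Returns true when the user falls inside the current rollout percentage. */
    static boolean isEnabledFor(String featureName, String userId, int rolloutPercent) {
        if (rolloutPercent < 0 || rolloutPercent > 100) {
            throw new IllegalArgumentException("rolloutPercent must be between 0 and 100");
        }
        return bucketFor(featureName, userId) < rolloutPercent;
    }
}
```

Because a user's bucket never changes, anyone who saw the feature at 10% still sees it at 30% and 50%, which keeps the experience consistent while the audience grows.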

Step 4: Monitor and alert

We use the ELK stack (Elasticsearch, Logstash, and Kibana) to manage our logs and event data.

For time-series data, we use Prometheus as the metric storage and alerting engine.

Each developer can set up their own metrics and build Grafana dashboards.

Setting up alerts is also part of the developer’s work, and it is the developer’s responsibility to tune the threshold that triggers the PagerDuty alert.

PagerDuty is an automated call, text, and email service that escalates notifications between responsible parties to ensure that issues are addressed by the right people at the right time.

All in all,

Don’t let the bugs fly out of control.