Introduction

One of the core functionalities in Outbrain’s solution is our crawling system.

The crawler fetches web pages (e.g. articles) and indexes them in our database.

The crawling flow can be divided into several high-level steps:

- Fetch – download the page

- Resolve – identify the page context (e.g. what is the domain?)

- Extract – try to extract features out of the HTML – like title, image, description, content etc.

- NLP – run NLP algorithms using the extracted features, in order to classify and categorize the page.

- Save – store to the DB.

The old implementation

The crawler module was one of the first modules that the first Outbrainers had to implement, back in Outbrain’s small-start-up days 6-7 years ago.

In January 2015 we decided that it was time to sunset the old crawlers and rewrite everything from scratch. The main motivations for this decision were:

- Crawlers were implemented as a long monolith, without clear steps (OOP as opposed to FP).

- Crawlers were implemented as a library that was used in many different services, according to the use-case. Each change forced us to build and check all services.

- The technologies were no longer up to date (Jetty vs. Netty, sync vs. async development etc.).

The new implementation

When designing the new architecture of our crawlers, we tried to follow these ideas:

- Simple step-by-step (workflow) architecture, simplifying the flow as much as possible.

- Split complex logic into micro-services for easier scaling and debugging.

- Use async flows with queues when possible, to control bursts better.

- Use newer technologies like Netty and Kafka.

Since the crawler flow, like many others, is basically an ETL (Extract, Transform, Load), our first decision was to split the main flow into 3 different services:

- Extract – fetch the HTML and resolve the domain.

- Transform – take features out of the HTML (title, images, content…) + run NLP algorithms.

- Load – save to the DB.
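The three-service split with queues in between can be sketched roughly as follows. This is only an illustration under assumptions: in-process `queue.Queue` objects and threads stand in for the real services and for Kafka, and the names `stage`, `run_pipeline` and the stub `extract`/`transform` functions are hypothetical.

```python
import queue
import threading

SENTINEL = None  # marks the end of the stream in this sketch


def stage(work, inbox, outbox):
    """Apply `work` to every item from inbox and forward the result."""
    while True:
        item = inbox.get()
        if item is SENTINEL:
            if outbox is not None:
                outbox.put(SENTINEL)  # tell the next stage to stop too
            break
        result = work(item)
        if outbox is not None:
            outbox.put(result)


def extract(url):
    # Stand-in for fetching the HTML and resolving the domain.
    return {"url": url, "html": "<title>Hello</title>"}


def transform(page):
    # Stand-in for feature extraction and NLP.
    title = page["html"].split("<title>")[1].split("</title>")[0]
    return {"url": page["url"], "title": title}


def run_pipeline(urls, queue_size=2):
    saved = []  # stand-in for the database
    # Bounded queues: a burst of URLs blocks the producer instead of
    # overwhelming the slower stages downstream.
    q_extract = queue.Queue(maxsize=queue_size)
    q_transform = queue.Queue(maxsize=queue_size)
    q_load = queue.Queue(maxsize=queue_size)
    workers = [
        threading.Thread(target=stage, args=(extract, q_extract, q_transform)),
        threading.Thread(target=stage, args=(transform, q_transform, q_load)),
        threading.Thread(target=stage, args=(saved.append, q_load, None)),
    ]
    for worker in workers:
        worker.start()
    for url in urls:
        q_extract.put(url)
    q_extract.put(SENTINEL)
    for worker in workers:
        worker.join()
    return saved
```

Because each stage only talks to its neighbours through a queue, a slow or restarted stage delays the items waiting in the queue rather than losing them.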

The implementation of those services is based on “workflow” ideas. We created an interface for implementing a step, and each service contains several steps, each doing a single, simple calculation. For example, some of the steps in the “Transform” service are:

- TitleExtraction

- DescriptionExtraction

- ImageExtraction

- ContentExtraction

- Categorization

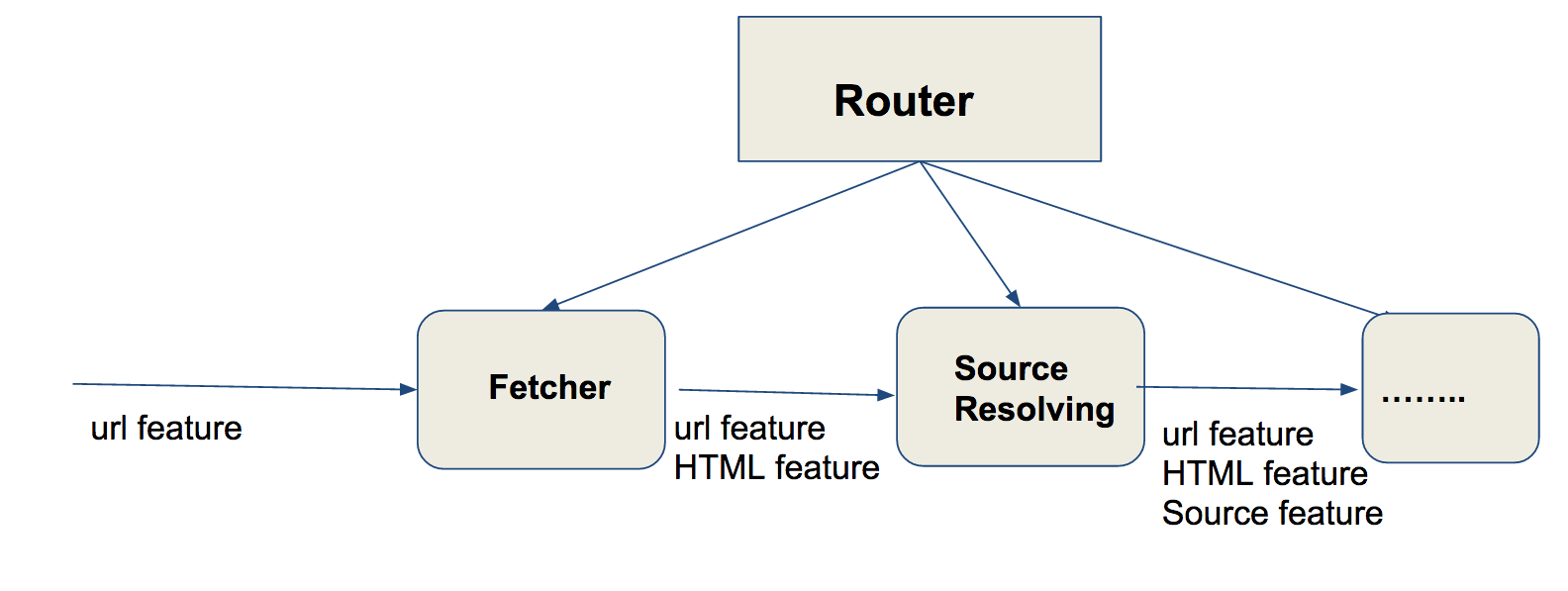

In addition, we implemented a class called Router, which is injected with all the steps it needs to run and is in charge of running them one after the other, reporting errors and skipping unnecessary steps (for example, there is no need to run categorization when content extraction failed).
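A step interface and a Router along these lines might look like the sketch below. It is not the actual Outbrain code: the `requires` attribute used to decide when a step can be skipped, the `run` signatures and the toy extraction rules are all assumptions made for illustration.

```python
from abc import ABC, abstractmethod


class Step(ABC):
    """One small unit of work in a service (hypothetical interface)."""

    name = "step"
    requires = ()  # names of the inputs this step needs

    @abstractmethod
    def run(self, data):
        """Return a dict of new values computed from `data`."""


class ContentExtraction(Step):
    name = "ContentExtraction"
    requires = ("html",)

    def run(self, data):
        html = data["html"]
        if "<p>" not in html:
            raise ValueError("no content found")
        return {"content": html.split("<p>")[1].split("</p>")[0]}


class Categorization(Step):
    name = "Categorization"
    requires = ("content",)

    def run(self, data):
        # Toy rule standing in for the NLP algorithms.
        topic = "sports" if "football" in data["content"] else "other"
        return {"categories": [topic]}


class Router:
    """Runs the injected steps in order, reporting errors and skips."""

    def __init__(self, steps):
        self.steps = steps

    def run(self, data):
        data = dict(data)  # do not mutate the caller's dict
        report = []
        for step in self.steps:
            if any(key not in data for key in step.requires):
                # An earlier step failed to produce what this one needs.
                report.append((step.name, "skipped"))
                continue
            try:
                data.update(step.run(data))
                report.append((step.name, "ok"))
            except Exception as exc:
                report.append((step.name, f"error: {exc}"))
        return data, report
```

With this shape, `Router([ContentExtraction(), Categorization()])` skips categorization automatically whenever content extraction raised an error, because the `content` value it depends on was never produced.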

Furthermore, any logic that was a bit complex was extracted out of those services into a dedicated micro-service. For example, the fetch part (downloading the page from the web) was extracted into a separate micro-service. This helped us encapsulate the fallback logic (between different HTTP clients) and some other related logic that we kept outside of the main flow. It is also very helpful when we want to debug: we just make an API call to that service and get the same result the main flow gets.

We modeled each piece of data we extracted out of the page as a feature, so each page is eventually translated into a list of features:
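The fallback between HTTP clients that the fetch service hides could be sketched like this. The function `fetch_with_fallback`, the `FetchError` exception and the two stub clients are hypothetical names; real clients would perform network calls.

```python
class FetchError(Exception):
    """Raised when every client fails to fetch the page."""


def fetch_with_fallback(url, clients):
    """Try each (name, client) pair in order and return the first success."""
    errors = []
    for name, client in clients:
        try:
            return name, client(url)
        except Exception as exc:
            # Remember why this client failed, then try the next one.
            errors.append(f"{name}: {exc}")
    raise FetchError(f"all clients failed for {url}: " + "; ".join(errors))


def flaky_client(url):
    # Stub that always fails, standing in for a client that timed out.
    raise TimeoutError("timed out")


def simple_client(url):
    # Stub that always succeeds, standing in for a second HTTP client.
    return f"<html>page at {url}</html>"
```

Calling `fetch_with_fallback("http://example.com", [("primary", flaky_client), ("backup", simple_client)])` returns the page from the backup client, and the caller never needs to know that the primary one failed.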

- URL

- Title

- Description

- Image

- Author

- Publish Date

- Categories

The data flow in those services was very simple. Each step got all the features that were created up to its run, and added (if needed) one or more features to its output. That way the features list (starting with only URL) got “inflated” going over all the steps, reaching the “Load” part with all the features we need to save.
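To make the "inflating" feature list concrete, here is a small sketch in which every step is a plain function that receives the features so far and returns the ones it adds. The step functions and the `inflate` helper are hypothetical, and the extracted values are hard-coded placeholders.

```python
def extract_title(features):
    return {"title": "Example title"}


def extract_description(features):
    return {"description": "Example description"}


def categorize(features):
    # Uses a feature produced by an earlier step.
    return {"categories": ["news"] if "title" in features else []}


def inflate(url, steps):
    """Run the steps in order, recording which features exist after each."""
    features = {"url": url}  # the list starts with only the URL
    history = [sorted(features)]
    for step in steps:
        features = {**features, **step(features)}
        history.append(sorted(features))
    return features, history
```

The `history` list returned by `inflate` shows the feature names after each step, starting from `["url"]` and growing by one entry per step in this example.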

The migration

One of the most painful parts of such rewrites is the migration. Since this is a very important core functionality in Outbrain, we could not just change it and cross our fingers that everything is OK. In addition, it took several months to build this new flow, and we wanted to test as we go, in production, and not wait until we are done.

The main concept for the migration was to create this new flow side by side with the old flow, having them both run at the same time in production, allowing us to test the new flow without harming production.

The main steps of the migration were:

- Create the new services and start implementing some of the functionality. Do not save anything in the end.

- Start making calls from the old-flow to the new one, asynchronously, passing all the features that the old flow calculated.

- Each step in the new flow that we implement can compare its results to the old-flow results, and report when the results are different.

- Implement the “save” part – but do it only for a small part of the pages – control it by a setting.

- Evaluate the new-flow using the comparison between the old-flow and new-flow results.

- Gradually enable the new-flow for more and more pages – monitoring the effect in production.

- Once feeling comfortable enough, remove the old-flow and run everything only in the new-flow.
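The comparison and gradual-enable steps above can be sketched as follows. This is an assumption-laden illustration: the post only says that saving was controlled by a setting, so the hash-based percentage in `in_rollout` is one possible way to do it, and `compare_features` and `shadow_crawl` are hypothetical names. The real call from the old flow was asynchronous, while this sketch is synchronous for brevity.

```python
import hashlib


def in_rollout(url, percent):
    """Deterministically place roughly `percent`% of URLs in the new flow."""
    digest = hashlib.sha256(url.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100 < percent


def compare_features(old, new):
    """Return the names of features whose values differ between the flows."""
    return sorted(k for k in new if k in old and old[k] != new[k])


def shadow_crawl(url, old_features, new_flow, save, percent):
    """Run the new flow next to the old one and save only if enabled."""
    new_features = new_flow(url)
    mismatches = compare_features(old_features, new_features)
    if in_rollout(url, percent):
        save(new_features)
    return mismatches  # in production these would be reported and monitored
```

Hashing the URL keeps a given page consistently in or out of the new flow as the percentage grows, which makes differences easier to investigate than with a random draw per crawl.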

The approach can be described as “TDD” in production. We created a skeleton for the new-flow and started streaming the crawls into it while it was still doing almost nothing. We then started writing the functionality, with each piece tested in production and compared to the old-flow. Once all steps were done and tested, we replaced the old-flow with the new one.

Where we are now

As of December 20th, 2016, we are running only the new flow for 100% of the traffic.

The changes we already see:

- Throughput/Scale: we have increased the throughput (#crawls per minute) from 2K to ~15K.

- Simplicity: the time needed to add new features or solve bugs has decreased dramatically. There is no good KPI here, but most bugs are solved in 1-2 hours, including production integration.

- Fewer production issues: it is easier for the QA to understand and even debug the flow (using calls to the micro-services), so some of the issues are eliminated before even getting to developers.

- Bursts handling: due to the queue-based architecture we handle bursts much better. It also allows simple recovery after one of the services is down (for maintenance, for example).

- Better auditing: thanks to the workflow architecture, it was very easy to add audit messages using our ELK infrastructure (Elasticsearch, Logstash, Kibana). The crawling flow today reports the outcome (and issues) of every step it does, allowing us, the QA, and even the field teams to understand the crawl in detail without the need of a developer.
